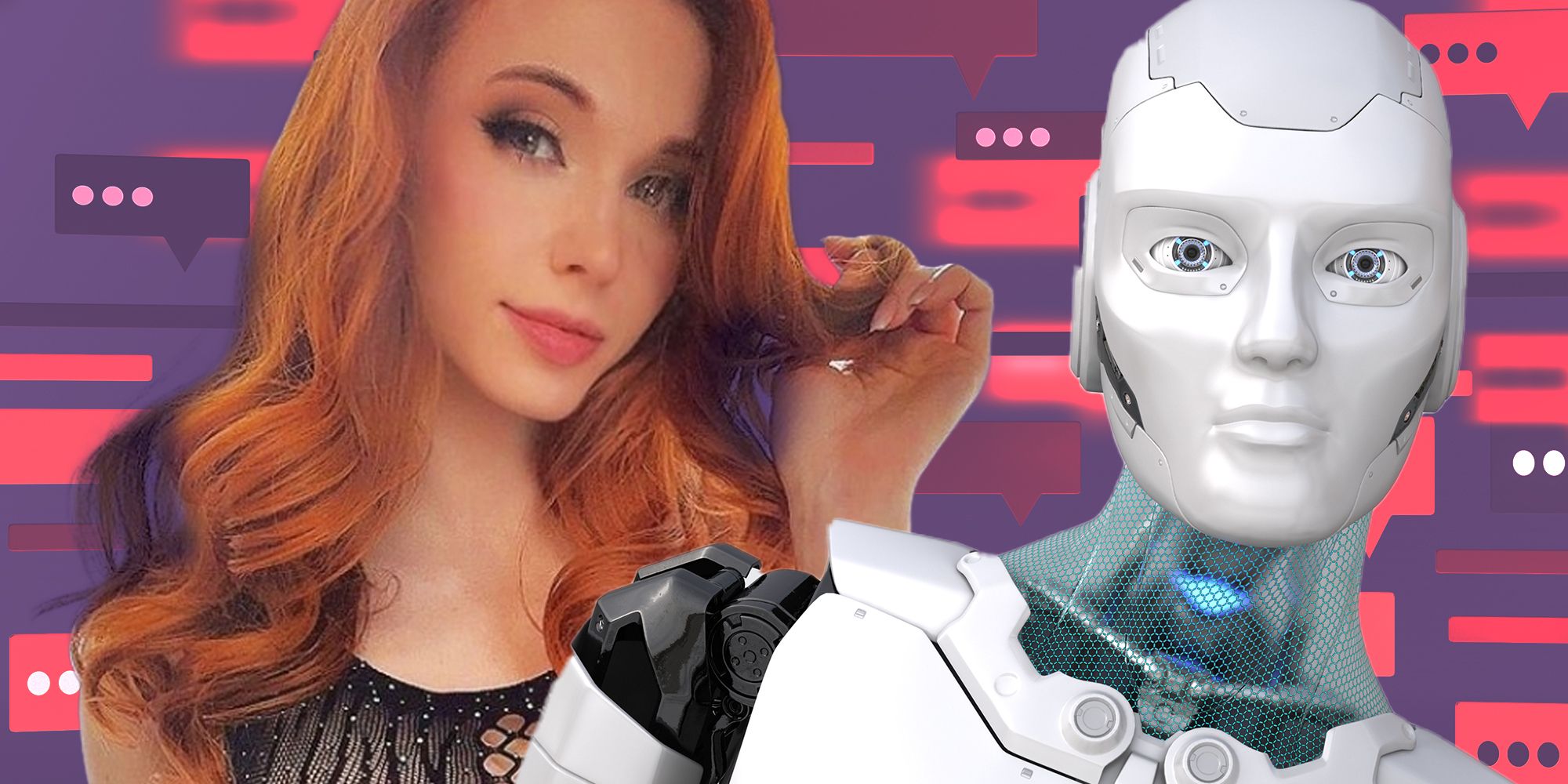

Earlier this week, Forever Voices AI announced the addition of Kaitlin “Amouranth” Siragusa to its Forever Companion platform as an artificial intelligence ‘companion’ that is available 24/7 for fans. The self-proclaimed ‘global leaders in turning influencers into AIs’ previously launched the first ‘influencer turned AI girlfriend’, and now the popular streamer will be available for ‘virtual dates’ and ‘profound discussions’ through the two-way voice app.

In an interview with Bloomberg, founder and CEO John Meyer said that the company can “democratise access” to an influencer’s fanbase, giving more people the opportunity to talk to Amouranth. I don’t really begrudge Amouranth making her bag, and I think much of what appeals to people about buying her OnlyFans is the fact that you get to pay for her attention anyway. An AI app just lets people pretend they’re doing that, even though she’s not actually having a conversation with them; it’s just a robot doing an impression of her.

What I find disturbing is that this app is happily normalising parasocial relationships. I think the culture around streamers and, more broadly, celebrities is extremely toxic, and the idea that AI apps are profiting from unhealthy dynamics rubs me the wrong way. Fans already think that their favourite Twitch streamers owe them personal details about their lives, and that they can buy attention from people. Many streamers, including Amouranth herself, hide details of their personal lives and whether they have romantic partners. When fans find out that the object of their one-sided affection is already in a relationship and that they don’t have a chance (as if they did, anyway), it can lead to outpourings of hate and people saying they feel “scammed”. Building a business around fans who want to have access to their favourite influencer all the time just enables this dynamic, and could foster very unhealthy parasocial relationships, making people think that they ‘know’ her. It doesn’t help that because it’s an app, it could disappear at any time.

Forever Voices AI says it’s counteracting this with “procedures that have automatic detection of a variety of situations from mental health situations” and that “the AI will actually in real-time either slow the conversation down if a conversation is going too long or if it appears someone might be getting, let’s say, addicted”. The app also has a mental health engine that detects mental health issues and connects users, in real time, with human therapists and human-run emergency hotlines if needed. How exactly this will be implemented is unclear, but the company seems very aware of the implications of this technology and the real-world impacts it could have on users - yet it’s going ahead anyway.

People call me a luddite all the time for criticising artificial intelligence, but it’s reductive to say that every criticism of new technology comes down to fear. My concern has always been that tech is being implemented without enough research into how it impacts its users, and that companies are more interested in profit than the ethical implications of their products. Of course, making an AI app where you can talk to influencers is a good idea for the company, because it’s exploiting a societal flaw to make money. But it’s not a good idea for us. The whole thing is skeevy, and I worry about the implications of this technology for an already misogynistic streaming industry. But who cares - you can have ‘steamy gaming sessions’ with an Amouranth bot, and that’s worth it, right?